Force-Modulated Visual Policy for Robot-Assisted Dressing with Arm Motions

Abstract

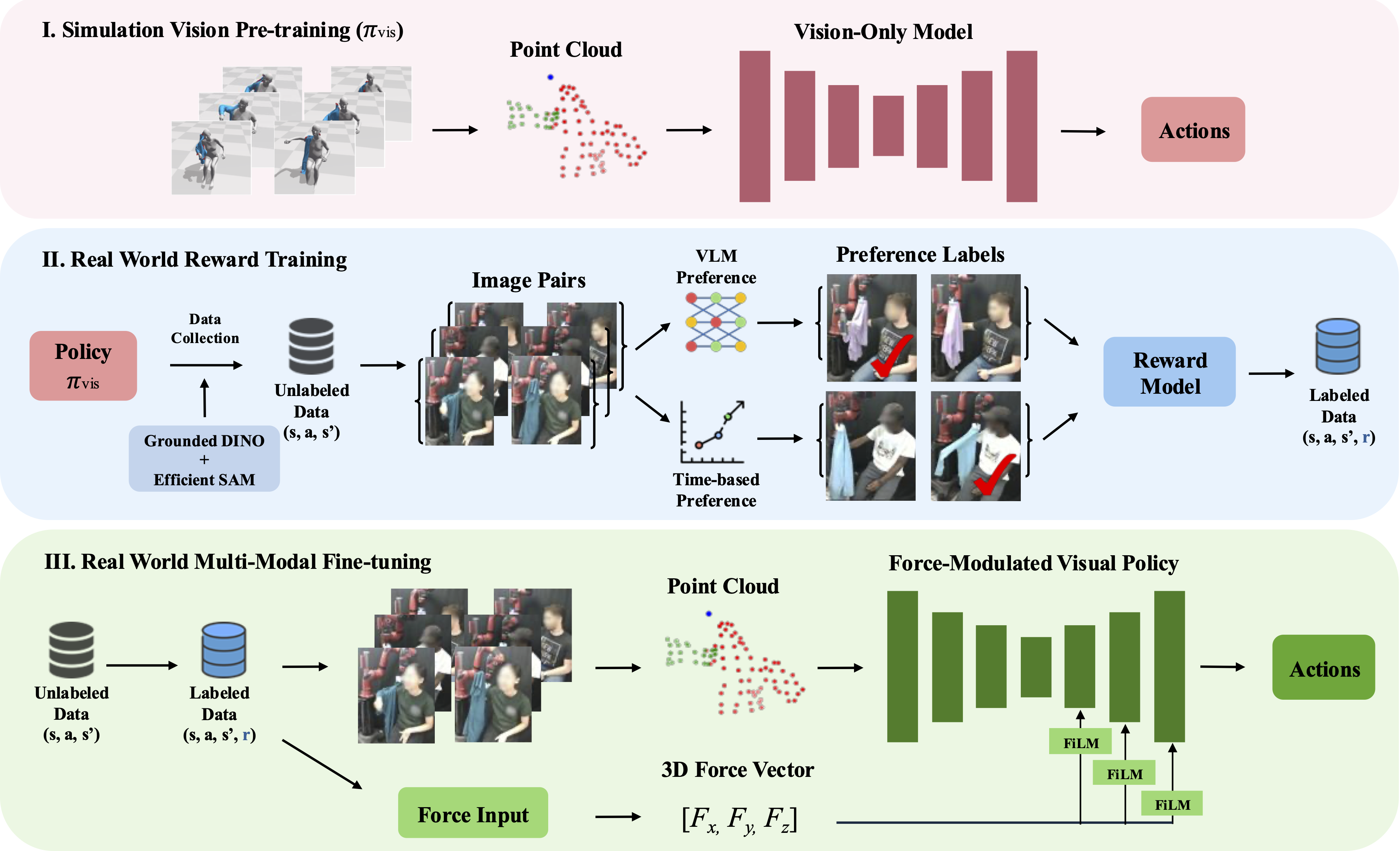

Robot-assisted dressing has the potential to significantly improve the lives of individuals with mobility impairments. To ensure an effective and comfortable dressing experience, the robot must be able to handle challenging deformable garments, apply appropriate forces, and adapt to limb movements throughout the dressing process. Prior work often makes simplifying assumptions, such as static human limbs during dressing, which limit real-world applicability. In this work, we develop a robot-assisted dressing system capable of handling partial observations with visual occlusions, as well as robustly adapting to arm motions during the dressing process. Given a policy trained in simulation with partial observations, we propose a method to fine-tune it in the real world using a small amount of data and multi-modal feedback from vision and force sensing, to further improve the policy’s adaptability to arm motions and enhance safety. We evaluate our method in simulation with simplified articulated human meshes and in a real-world human study with 12 participants across 264 dressing trials. Our policy successfully dresses two long-sleeve everyday garments onto the participants while adapting to various kinds of arm motions, and greatly outperforms prior baselines in terms of task completion and user feedback.

Overview Video

Model Architecture

Real-World Evaluation Study

Our evaluation study involves 12 participants, two new garments, and seven arm motions. Each participant completes 22 trials: 14 using our method and 4 each for the two baseline methods. Our method generalizes robustly across all seven arm motions, including those not seen during training (denoted with *). Videos are presented at 2x speed during the participant's arm motion and 6x speed otherwise.
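As a quick consistency check on the study design, the trial counts stated above can be tallied in a few lines of Python. This is only a bookkeeping sketch: the variable names are illustrative, and the last quantity assumes that the 48 matched trials used in the baseline comparison correspond to the 4 baseline conditions per participant.

```python
# Trial counts as stated in the text; variable names are illustrative only.
NUM_PARTICIPANTS = 12
TRIALS_OURS = 14          # trials per participant using our method
TRIALS_PER_BASELINE = 4   # trials per participant for each baseline
NUM_BASELINES = 2

# Trials each participant completes across all three methods.
trials_per_participant = TRIALS_OURS + NUM_BASELINES * TRIALS_PER_BASELINE

# Total dressing trials in the study.
total_trials = NUM_PARTICIPANTS * trials_per_participant

# Assumption: the matched comparison set contains one trial per participant
# for each of the 4 conditions that every method was evaluated on.
matched_trials_per_method = NUM_PARTICIPANTS * TRIALS_PER_BASELINE

print(trials_per_participant, total_trials, matched_trials_per_method)
# prints: 22 264 48
```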

Use Phone *

Rub Face *

Wave *

Scratch Head

Lower Arm

Receive Bottle

Improvise *

Comparison with Baselines

In the results shown here, our method is presented in the left column, while the middle and right columns show the two baselines. Our method consistently succeeds in dressing the garment onto the participant's shoulder, whereas the baselines often fail to move past the upper arm. Arm motions not seen during training are denoted with *. Videos are presented at 2x speed during the participant's arm motion and 6x speed otherwise.

Rub Face *

Use Phone *

Receive Bottle

Rub Face *

Lower Arm

Policy Adaptability and Continuous Robot Motion

Adaptability to Different Arm Motions

We show comparisons across trials to highlight the policy's adaptability to different arm motions. Rather than waiting passively, the policy continuously adjusts and makes progress throughout dressing.

In Trial A, the participant extends their arm downward, leaving it mostly straight after the motion. As a result, the robot pulls the garment directly towards the shoulder without turning behind the arm. In contrast, in Trial B, the robot pulls the garment further behind the elbow to make a broader turn, adapting to the participant's more bent arm posture.

In Trial A, the participant keeps their forearm bent sharply while scrolling on their phone. To avoid snagging on the elbow, the robot moves on the outer side of the arm, making a wider turn around the elbow. In Trial B, the participant extends their arm slightly forward, positioning the elbow ahead of the shoulder. As a result, the robot takes a more direct path and begins aligning the garment with the shoulder earlier.

In Trial A, the participant holds their arm in a higher, L-shaped position. In response, the robot pulls the garment further behind the elbow, making a bigger turn to prevent the garment from snagging on the elbow. In Trial B, the participant extends their arm to the side and moves it up and down several times. The robot responds by shifting toward this sideward arm position. Notably, when the participant retracts their arm into a bent position, the robot quickly adapts and successfully places the garment on the participant’s shoulder.

Adaptability to Different Garments

These examples highlight that the policy adapts to different garment properties. Compared to the jacket, the plaid shirt has wider shoulder openings, which exert less force on the participant as it is pulled onto the arm. In response, for some arm motions, the policy pulls further behind the person's arm when dressing with the plaid shirt. This behavior helps avoid snags from small turns and takes advantage of the wider openings to reduce applied force. In contrast, for the jacket—where tighter sleeves require more precision—the policy follows a more standard dressing trajectory.

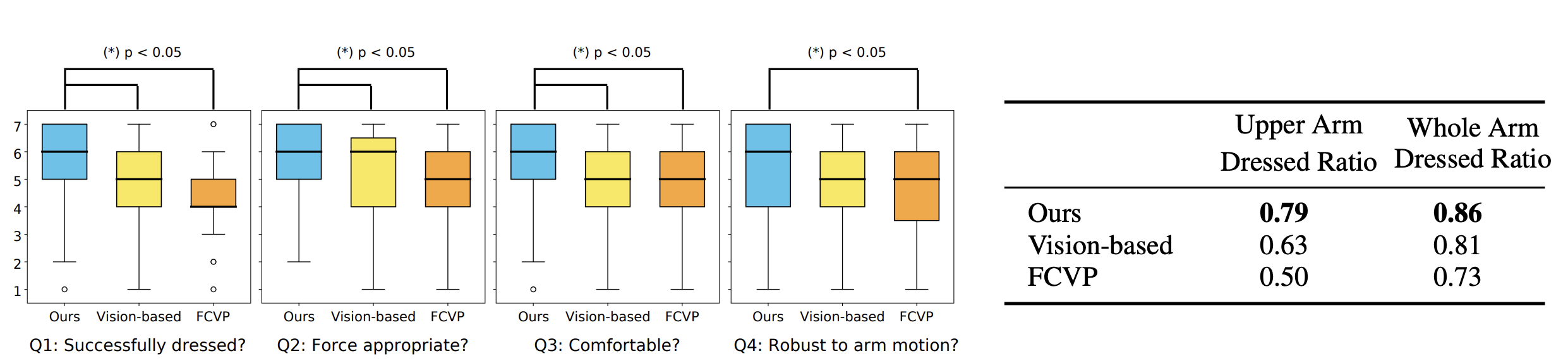

Real-World Quantitative Results

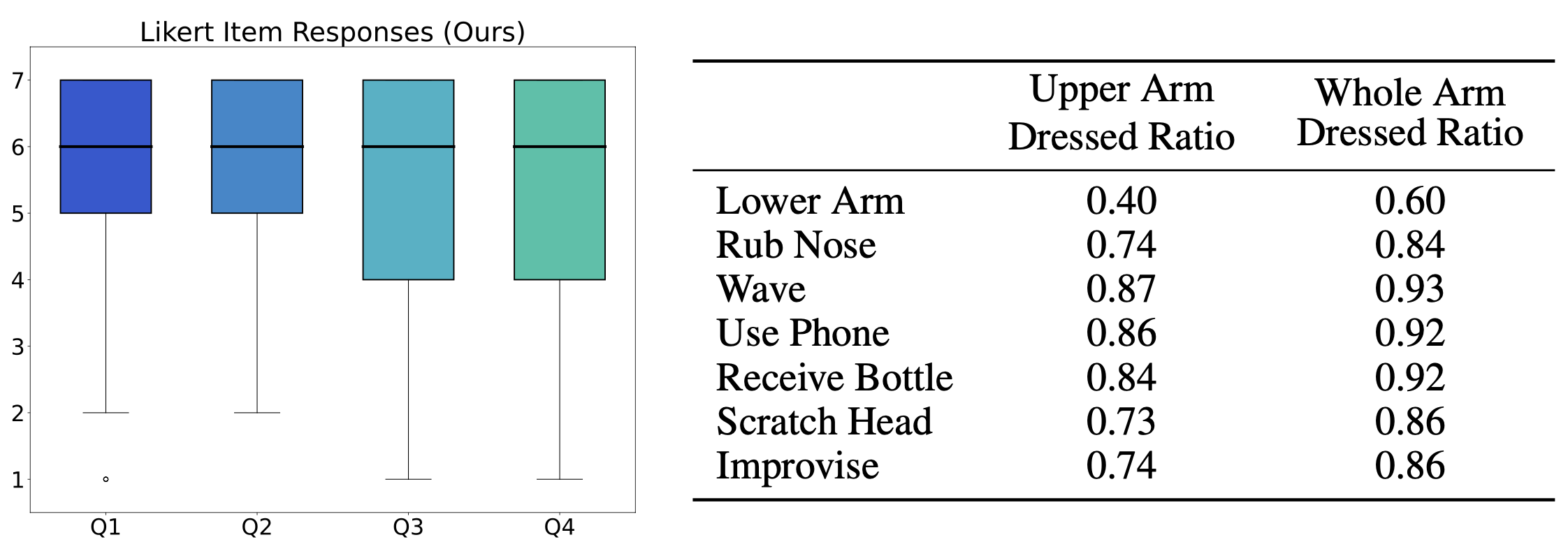

We compare our method against the two baselines on the 48 trials where all methods are evaluated on the same arm motions and garments. As shown in the right table in Figure 2, our method outperforms both baselines in terms of arm dressed ratios. The Likert item responses on the left show that participants generally agree that our method provides a better dressing experience.

Simulation Evaluation Study

In simulation, we generate four body sizes—small, medium, large, and extra large—by varying arm length and radius. We define 14 arm motions by executing seven distinct motions and their reversed versions. Additionally, we select three garments with varying sleeve widths and lengths. Below, we present a subset of the body size, arm motion, and garment combinations.
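The sketch below illustrates how such an evaluation grid could be enumerated. It is not the authors' code: the motion and garment names are placeholders (the text does not name them), a motion is assumed to be a time-ordered list of waypoints, and "reversed" is assumed to mean playing those waypoints backwards in time.

```python
from itertools import product

# Body sizes come from the text; motion and garment names are placeholders.
BODY_SIZES = ["small", "medium", "large", "extra_large"]
BASE_MOTIONS = [f"motion_{i}" for i in range(1, 8)]    # seven distinct motions
GARMENTS = ["garment_a", "garment_b", "garment_c"]     # three garments


def reverse_motion(trajectory):
    """Return a new trajectory with the waypoints in reverse time order."""
    return list(reversed(trajectory))


def with_reversed(base_motions):
    """Pair every base motion with a reversed variant (7 -> 14 motions)."""
    return base_motions + [f"{name}_reversed" for name in base_motions]


motions = with_reversed(BASE_MOTIONS)

# Every (body size, arm motion, garment) combination in the full grid.
grid = list(product(BODY_SIZES, motions, GARMENTS))

print(len(motions), len(grid))
# prints: 14 168
```

Under these assumptions the full grid has 4 x 14 x 3 = 168 combinations, of which only a subset is shown here.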

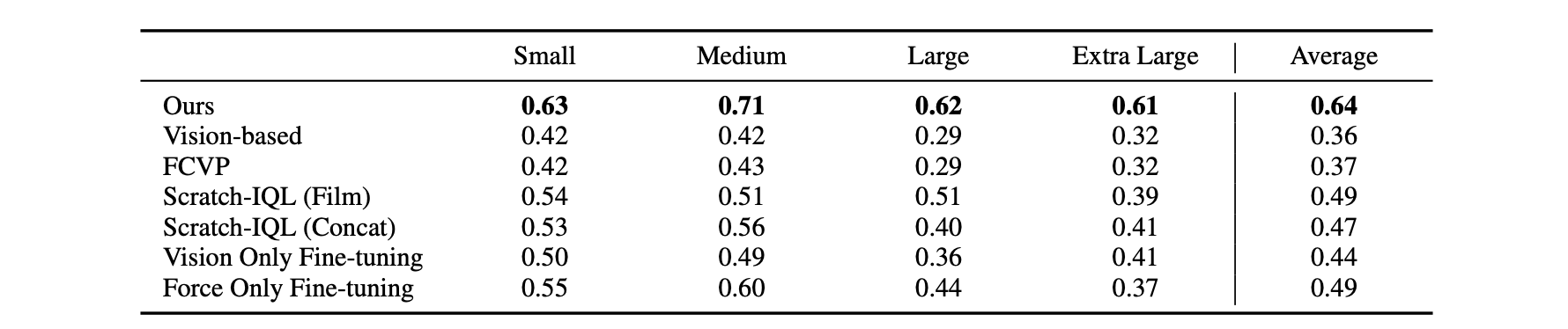

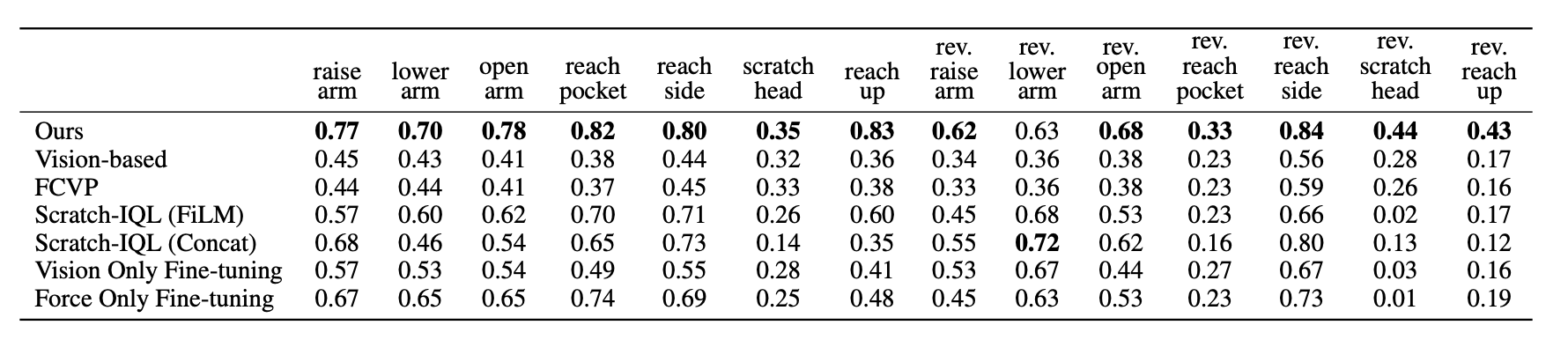

Simulation Quantitative Results

Our method achieves the highest upper arm dressed ratio on 13 of 14 arm motions and across all body sizes, outperforming the baselines by 0.15 to 0.28. Notably, while all other methods show clear performance degradation as body size increases from Medium (the training body size) to Large and Extra Large, our method maintains consistent performance across all three unseen body sizes (Small, Large, and Extra Large).